Several years ago, we introduced the concept of Fiat News on these pages.

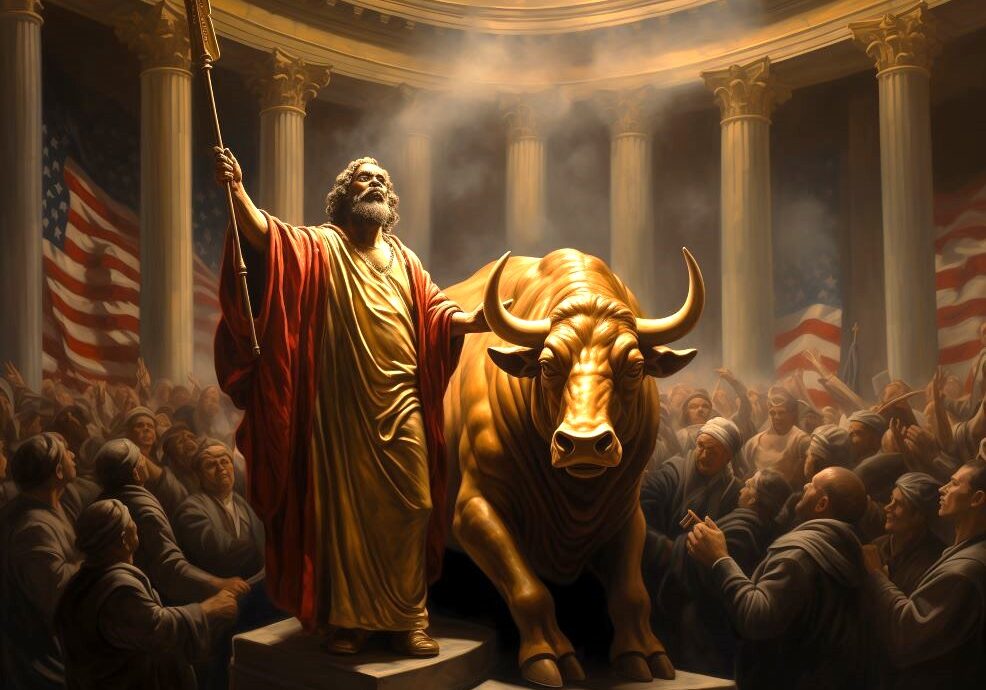

It is a simple idea. In the same way that money created by fiat debases real money, news created by fiat debases real news.

Although it misinforms, fiat news should not be understood as misinformation, at least in the colloquial sense. News which contains false information or distorted interpretations of facts can be better thought of as counterfeit news. Like counterfeit money, enough counterfeit news can debase the real thing, too. Yet even considering how widespread counterfeit news has become, fiat news exists on such a massive scale that its power to debase is in a different category. Nor is fiat news synonymous with bias. We think bias represents a causal explanation for a very specific kind of recurring fiat news.

Most “media watchdogs” are in the business of identifying one of those two things: misinformation or bias. The problem with these efforts, beyond that they do not capture the full scope of actions which debase the information content of news, is that it is practically impossible to report on misinformation or bias in a manner that is itself not colored by the opinions of the author, or else designed to shape how the reader interprets facts and events. While they may in some cases offer a useful service in the face of blatant lies published through politically invested news outlets, too often they become yet another source of fiat news.

Why? Because fiat news is the presentation of opinion as fact. Fiat news is news which is designed not to provide information for the reader to process, but to provide interpretations of information for the reader to adopt. Fiat news is the primary vector for Nudging, shaping Common Knowledge, or what everybody knows everybody knows. Fiat news is how governments, parties, corporations and other institutions in a free and always connected society meticulously shape it - then tell us that it was our idea.

By design, fiat news isn't always easy to spot. Outside of editorial pages, it is rare indeed that an expression of opinion as fact would include obvious phrases like “we believe” or “we think.” Instead, media outlets guide interpretations through more subtle means that may sometimes be as invisible to the author and editor as they are to the reader.

Several years ago, we also introduced what we called The Narrative Machine.

I have only begun to scratch the surface of this important piece, but as one who writes a paid-for newsletter I find there are a lot of red flags. Am I ascribing something inappropriately? Have I quoted somebody out of context? Do my facts fit? Will the reader be better informed after reading my stuff? Many places to look for shoes that fit. Thanks, Rusty.

Great starting point for a hugely important public discussion.

My point of reference is my analysis from 5-6 years ago of how Uber used these exact techniques to create massive Fiat Corporate Value (nearly $100 bn created out of thin air).

A couple of items you might consider adding to your list:

16: Ignore the Funding/Financial Interests of the Experts Interviewed (or the role of longstanding well-funded think tanks in political situations). Stories quote experts as if they are dispassionate analysts whose claims are backed by the kind of rigorous, peer-reviewed research you’d see in major academic settings, and deliberately conceal that those experts are being paid large sums by the interests their claims support. Tiny bits overlap with “Appeals to Authority,” “Coverage Selection” and “Missionary Statements,” but the overall problem here goes well beyond what you’ve included in those three items.

Only One Side of the Story Gets Reported. Again, small bits are included in other items. For years, MSM coverage of Uber exclusively reported that they were the greatest thing since sliced bread, and had succeeded because of cutting-edge technology that created huge productivity advantages. This also applies generally to MSM coverage of US overseas military actions in the last 25 years.

Never Any Attempt To Review the Bigger Picture. In isolation, fiat news stories that simply repeat corporate or governmental claims are somewhat understandable given the pressures of the news cycle. But you never ever see an MSM outlet step back after a number of years and examine whether the corporate or governmental claims uncritically published in the past actually turned out to be true. Was Uber actually the biggest thing since sliced bread? Did claims about weapons of mass destruction actually justify 20 years of war? Did those 20 years of war actually provide major benefits for the people of Iraq/Afghanistan?

Reject/Ignore Longstanding Measures of Bottom-Line Results. In 12 years Uber has lost $31 billion on its actual, ongoing taxi and delivery services and has yet to generate a single dollar of positive cash flow and no one can explain how it ever could produce sustainable profits. These are well-understood ways to measure corporate performance, but you won’t find a single MSM story that discusses them seriously. Even the Guardian’s recent Uber “expose” completely ignored competitive economics and financial results in order to focus on Stylistic/Cultural issues which provide a highly inaccurate picture of Uber’s performance. I’m sure everyone can quickly recall dozens of comparable issues in the coverage of military and political problems.

Maybe a couple of these could be subsumed in a modified version of your first 15 warning signs, but I’m guessing that list will end up expanding.

Excellent. I’d appreciate explicit examples of each “face”. Maybe even in a separate doc? I think I get them all but real-world examples of each would help.

Which face is employed by that conclusion? I sense something dripping from it, but I don’t think it’s empathy.

Hi Roy. Always a good practice, but I’d say most newsletters fall squarely within the “obviously opinion” camp. While the rise in the aggregate volume of this kind of content is probably tipping the scales on the fiat news spectrum, I don’t know that it’s our primary concern. There should be a place for people to attempt to convince others in the various media; we’d argue that place is “where people know someone is seeking to convince them.” My guess is your newsletter falls squarely in that camp.

Hah! None whatsoever. I’m not a news outlet, and happy to assert some tendency toward self-aggrandization within certain professions. Couldn’t possibly be something that financial writers are guilty of, too.

Absolutely, Kevin! Definitely the plan over the next few pieces.

Thanks, Hubert! I think these are good ideas for checks for anyone who is thinking critically about a topic. Really good ideas, actually.

I also think the pitfalls of classifying them as fiat news are significant. In all three of these, you’re talking about framing through omission of one kind or another, which is absolutely a thing. I think you’re 100% on the right track and have zero disagreement with the specific examples. But framing through omission is a thing which presumes you can identify some objective baseline of “what someone should be covering” with respect to a particular topic. Not only am I not sure we can do that, I actively think that if I attempted to do it I would be programmatically introducing that unavoidably subjective assessment into the model. And I mean that both in the sense of “if I tried to do this personally in my own news consumption” and in the sense of “if I tried to incorporate this into a fixed fiat news model.”

And there are two answers to those two senses. To the former (i.e. our personal news consumption habits) I think it is very good to be aware of each of the ways mentioned by you that authors can frame through omission. I think it is also very good to be mindful that we are not doing question begging of our own. When we start doing the “but why aren’t you talking about” game, it is very often - or at least very often is for me, as I can’t speak for you - because we’ve already drawn some conclusions of our own and are just a bit miffed that they aren’t actively working to support our conclusions.

To the second sense (that is, modeling this more systematically), I presently think that the best way to track this is through the Coverage Selection face of fiat news. What we can model is the extent to which topics (e.g. actual financial results) are covered for one entity (e.g. your average S&P stock) at rates which they are not for another (e.g. Uber). We can model and show how that differs among outlets. We can show how it differs over time. We model topics and entities like this all the time, so this is a pretty vanilla part of what we’d potentially be looking at for Coverage Selection.
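As a rough illustration of the comparison described above, here is a minimal sketch that computes how often a topic shows up in coverage of one entity versus another. The article records, the topic tags and the `topic_coverage_rates` helper are all hypothetical and hand-made for the example; this is not the actual model, and a real version would need topic tagging, outlet breakdowns and time windows.

```python
from collections import defaultdict


def topic_coverage_rates(articles, topic):
    """Return {entity: share of that entity's articles tagged with `topic`}."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for article in articles:
        entity = article["entity"]
        totals[entity] += 1
        if topic in article["topics"]:
            hits[entity] += 1
    return {entity: hits[entity] / totals[entity] for entity in totals}


# Toy records invented for illustration; these are NOT real coverage data.
articles = [
    {"entity": "Uber", "topics": {"technology", "culture"}},
    {"entity": "Uber", "topics": {"culture"}},
    {"entity": "Uber", "topics": {"financial_results"}},
    {"entity": "Uber", "topics": {"technology"}},
    {"entity": "Typical S&P stock", "topics": {"financial_results"}},
    {"entity": "Typical S&P stock", "topics": {"financial_results", "technology"}},
    {"entity": "Typical S&P stock", "topics": {"culture"}},
    {"entity": "Typical S&P stock", "topics": {"financial_results"}},
]

rates = topic_coverage_rates(articles, "financial_results")
# Positive gap: the topic is covered more often for the comparison entity.
gap = rates["Typical S&P stock"] - rates["Uber"]
print(rates, gap)
```

The point of measuring a gap between entities, rather than against a fixed standard, is that it avoids having to declare what “proper” coverage looks like; the baseline is simply how the same topic is treated elsewhere.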

I think that’s ultimately going to get, say, 60% of what you’re talking about, which means I’m probably leaving 40% on the table to avoid false positives. Knowing that, how do you think we could improve on that to better capture the very real things you note without injecting too much subjectivity about baseline expectations of “proper” coverage ourselves?

Mapping out Content Context has to involve some mechanism that reads the headline(s) and compares them to the words used to promote the story on social media, specifically Twitter. Any revision to the social media promotion (deleting a tweet and replacing it with something more neutral, say) would provide another data point that the magical algorithm (and to me it is indistinguishable from magic) could use to gauge the story’s fiat newsy-ness.

I’m picturing something simple like a point system where 0 is pure news and 1 is pure opinion, and each ‘face’ adds some weighted amount of points to the total score. I’m thinking this way because of course I have no bloody idea how the Hell NLP works or how this project is going to judge these things. That’s multiple levels above my comprehension. But in thinking broadly about how to judge context, which is by nature subjective, I would at least in concept try to assign a value to certain actions. So deleting a clickbait tweet and replacing it with something neutral would be worth 0.1 or whatever. (For comparison an article that is written from the first person and has a lot of ‘I believe’ or ‘it’s time for us to do X’ would score 1.0 right off the bat) You have to figure out how to assign a value to not just the words used but the actions around those words. Does the headline correspond with the actual language of the piece? Does the headline have someone’s name in it but that person is only mentioned once in a 700 word story? If so is that meaningful? If it is then why? If it isn’t then why not?
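For what it is worth, the point system pictured above could be sketched roughly like this. Only the 0.1 for a replaced promotional tweet and the automatic 1.0 for first-person opinion language come from the comment; the other weight, the signal names, the phrase list and both helper functions are placeholders assumed for illustration, and nothing here reflects how the actual project scores anything.

```python
import re

# Hypothetical weights. 0.1 for a replaced promo tweet is the commenter's
# example; the other value is an arbitrary placeholder.
SIGNAL_WEIGHTS = {
    "promo_tweet_replaced": 0.1,
    "headline_name_barely_mentioned": 0.2,
}

# Assumed list of explicit opinion phrases (straight or curly apostrophe).
FIRST_PERSON = re.compile(
    r"\b(i believe|we believe|it[’']s time for us)\b", re.IGNORECASE
)


def name_mentions(name, body):
    """Count whole-word, case-insensitive mentions of `name` in `body`."""
    pattern = r"\b" + re.escape(name) + r"\b"
    return len(re.findall(pattern, body, flags=re.IGNORECASE))


def fiat_score(body, signals):
    """Score from 0 (pure news) to 1 (pure opinion)."""
    # Explicit first-person opinion scores 1.0 right off the bat.
    if FIRST_PERSON.search(body):
        return 1.0
    # Otherwise add up the weights of the observed signals, capped at 1.0.
    total = sum((SIGNAL_WEIGHTS[s] for s in signals), 0.0)
    return min(1.0, total)


print(fiat_score("I believe it is time to act.", []))
print(fiat_score("The company reported quarterly results.", ["promo_tweet_replaced"]))
print(name_mentions("Smith", "Smith spoke on Monday. The smithy was closed."))
```

A count like `name_mentions` only answers the mechanical half of the question (how often the headline’s subject appears in the body). Whether a single mention in a 700 word story is meaningful is still the judgment call raised above.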

I will add, along a different line here, that Unsourced Attribution is probably the most dangerous of the 15 faces. That stories are believed ab initio even though nobody has put their name to them is frightening. We skipped past the ‘trust but verify’ model and went right into ‘because some guy who totally exists said so’. The media is obsessed with telling us that no, we wouldn’t know their girlfriend, she goes to another school. Maybe we don’t care right now because those types of stories are only hurting the Bad People, but one day they’ll move on and something or someone you care about will be next.


I want to suggest a fiat news tactic I don’t think is covered by those here, forgive me if you think it is. I call it “speculation anchoring,” although it may have another name.

This is when an article suggests causality or connection between two things in its headline or in its opening paragraphs, but then goes on to say, wait, actually, there is no real evidence of this and the connection is purely speculative. Because a lot of readers won’t read carefully past the headline or the first paragraphs, the purported connection is established in the reader’s mind, due to the fact that an article ostensibly asserting the connection was published. What they will remember is, “I saw an article a while back saying X…” and will forget the details about evidence or lack of it. In this way the reader’s mind is “anchored” toward a false or weakly evidenced belief, even as the article may not explicitly argue for that belief. The reader thinks, “X is happening”, when in fact it is only someone speculating about X. I have sometimes even seen that the article will acknowledge evidence that directly refutes X, leaving it a mystery as to why the article was written - unless it were trying to establish a narrative rather than illuminate fact.

This technique is everywhere, but I think it is most rampant in articles about climate change - something weird is happening with the weather or in the natural environment. “Scientists think” this could be due to climate change. Read on to see that there is no evidence that climate change has anything to do with it. Yet for some reason, you are still left with the impression that this is a climate change thing.

Here is a recent example about shark attacks on the rise on the East Coast, claiming in the subheadline that “Climate change may play a factor in sharks venturing closer to shore.” There is a heading that reads “Global warming may play a factor.” The first sentence of the paragraph under it, however, admits that “there is no data” to support this claim at all. Nevertheless, we still see several paragraphs of pure speculation on the topic, which includes implicit appeals to authority because the speculation is coming from “experts.” According to the headline, “Scientists have an explanation,” yet further paragraphs lay out numerous other possible causes, and the article actually establishes that they DON’T have an explanation.

The article even goes on to say, there might not even be more sharks in the water, but just more people, conceding that even the apparent trend in shark behavior could be completely illusory. Moreover, anybody who has even semi-regular interaction with nature knows that wild animals sometimes deviate from the patterns we expect for no clear reason. But now there’s an article out there propagating the idea that “sharks are changing their behaviors because of climate change.”