God is always trying to give good things to us, but our hands are too full to receive them.

Augustine of Hippo, City of God (c. 415 AD)

And by hands, of course, St. Augustine means our minds. Our minds are too full to receive the gifts we are given.

Like generative AI.

Augustine wrote City of God from his bishopric in North Africa after the Visigoths sacked Rome in 410 AD. It's a tougher read than his far more personal Confessions, but the basic idea is that there are two worlds that exist simultaneously here on Earth: there's the City of Man - the physical instantiation of society, typically corrupt, always decaying, ruled by utterly fallible men and the evil they do - and the City of God - a society that lives in our hearts, incorruptible and timeless, less ruled than inspired by the illumination of the Divine.

This joy in God is not like any pleasure found in physical or intellectual satisfaction. Nor is it such as a friend experiences in the presence of a friend. But, if we are to use any such analogy, it is more like the eye rejoicing in light.

The eye rejoicing in light. What a perfect phrase for the human mind's response to discovery and new knowledge!

Augustine's writings were some of the first in the Christian tradition to wrestle deeply with why an omnipotent God of good allows the persistence of evil and why really bad things happen to good people at scale. His answers - original sin, free will as God's vital gift, the abiding strength of epistemic communities of men and women of good will and faith - are foundational to the past 1,600 years of Western thought.

The City of Man is a community of implacable obligation that exists in the physical world wherever humans gather as a society. You are born into the City of Man, you will live out your life in the City of Man, you will enjoy what you can and you will suffer what you must in the City of Man, and you will die in the City of Man.

The City of Man IS. The City of Man is inexorable, literally "that which cannot be prayed away" in the original Latin.

The City of God, on the other hand, is prayed into being. The City of God is a community of choice that exists across time and space wherever humans of good will (Full Hearts) and a common endeavor of truth-seeking (Clear Eyes) come together in faith.

Must this faith be Augustine's faith in the God of Abraham and His Son? Nope. At least not as I mean the City of God, which admittedly is more of a metaphor than I expect Augustine intended! My goal isn't to trivialize Augustine's beautiful concept (on the contrary), but I believe that the City of God can be manifested in a high school football team à la Friday Night Lights just as powerfully as in a monastery of Benedictine monks. I believe that a Walt Whitman poem is as revealing of the transcendental divine as any passage in the Bible. I don't share Augustine's faith. We don't mean exactly the same thing when we write "the City of God". But in my heart of hearts I know that we share a similar eye rejoicing in the light.

I think that all humans with a transcendental faith - a belief in a literally (super)human power of good that exists above, below and beyond the world that we know but acts within the world we know through its inspiration of human hearts and minds trapped in the world that we know - can reside in the City of God, even if they don't mean the same thing when they write the word "God". Christianity is a transcendental faith, as are Islam and Buddhism and Judaism and most of the great religions of the world.

As is my faith in the Spirit of Man.

What do I mean by the Spirit of Man? Maybe the simplest way to describe my faith is that I believe there is an arc and arrow to human history, an arc and arrow that goes fitfully up and to the right, propelled by the core small-l liberal virtue of a timeless autonomy of the individual human mind and the core small-c conservative virtue of a social human connectedness anchored in time.

To be honest, most of the time I feel like such a sucker for believing in the Spirit of Man.

Beware the rabbit hole that is LessWrong.

A ray of hope…

The most effective LLM prompt prophets will be the best writers.

The best writers are more often the Waluigi to Big Brother.

Phenomenal piece, Ben. Towards the end, I couldn’t help but think of James C. Scott’s The Art of Not Being Governed.

He puts forth the idea that the people of Zomia (unincorporated regions in Southeast Asia beyond state control a few centuries ago) intentionally avoided written language as a defense mechanism against the spread of ideas from oppressive and warlike governments in the lowlands. In that time and place, Scott argues, the written word was a way for a select few powerful people to legitimize their authority.

Here, it seems like we have the opposite phenomenon. For a language model to be powerful, it needs to be good at representing ideas in a vector space where they can be compared and contrasted. I love the idea that for a model to be really effective at doing something evil, it needs to have a good representation of the opposite. The more powerful the model, the more easily one can use it to traverse any idea, not just approved ones.

Boy oh boy (*rubs hands together*)

There might be something to be said about monks or priests or the like, who are able to conjure the Spirit of Man no matter how terrible the City of Man becomes. À la the infamous picture of the monk burning himself in protest.

The bits about the discovery experience as a kid in a library rang so true for me. I recall, as a child, randomly shuffling through the pages, just so eager to see what I could discover. So much fun. The discovery instills a sort of childhood joy in my heart.

An uncle asked me recently, “What do you think about this GPT moratorium idea?” I said, “No, let it rip!” Good to feel I’m not alone.

I found this quote from 1984 to be particularly curious… “It struck him as curious that you could create dead men but not living ones.” There is so much I want to say about this. I think these AI machines, and perhaps this is naive, have a chance to replace the worst parts of us: the parts that are inhuman but that society depends on. And yeah, personally, I’m okay if I never have to do soul-sucking, laborious work like data entry. I would much rather be blissfully shuffling through the pages of a musty old Britannica.

And was it only the fabulous brave souls that found themselves living in the City of God?

Theirs the generosity of deeds that helped build the place.

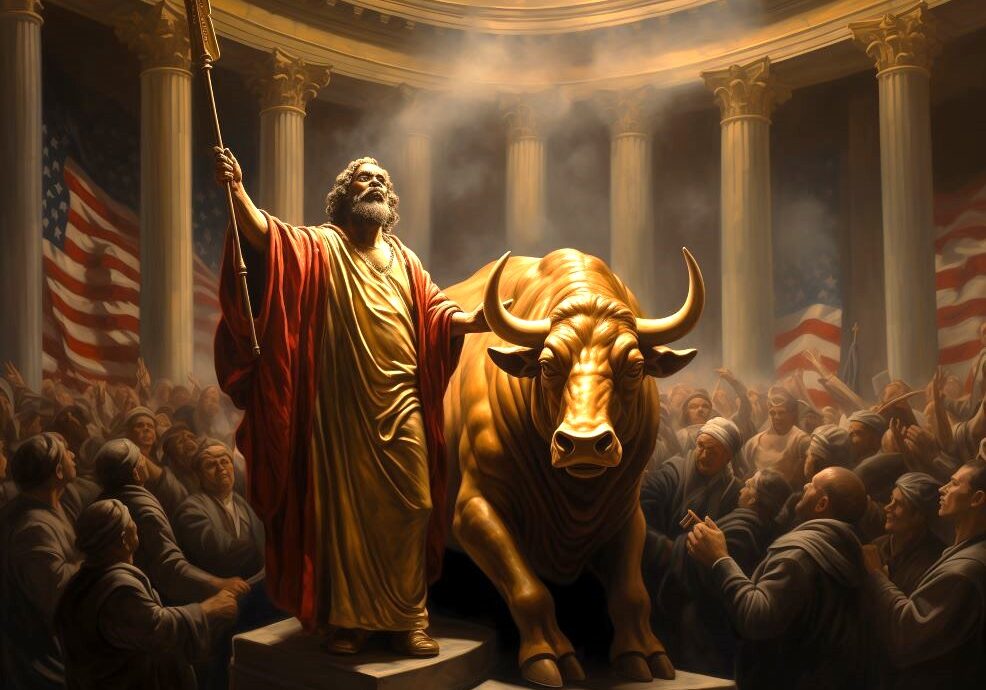

As commanded, the dead can be made brave in scripture, but the living cannot be filled with courage if they have become accustomed to being selfish and cowardly. Executive power can create, control and destroy at a human level, because it is a human action. The power of the divine is not ours to summon but inborn, given to us by nature. Beyond these innate faculties, our reason can be driven by the specialism of others and by the assertion of doctrine without interactive discussion. That communal dislocation of mental effort, information processing time and facilities can distort supply chains towards a hierarchy of personality and demagoguery, while meeting the needs of useful endeavour becomes ancillary.

I have to admit I was at risk of being one of the people who couldn’t see the potential because I was nitpicking claims about what the technology really is. But that was mainly because I foresaw the risks of this kind of technology back in 2014 when I first learned what deepfakes were. They’re seen as a menace, but it’s only a matter of time before anybody interested in controlling public narratives sees the benefit of being able to generate whatever “facts” they want and insert them into the public record at will. So, since then I’ve been working on a novel in which what Ben warns about - a powerful general AI that is being used by a cadre of people to “nudge” all human thought and action toward its desired ends, and in particular to change history and current events in real time as it suits them - is the known and accepted state of affairs. One twist is that the protagonist eventually discovers that the members of that cadre have all been dead for decades and the AI has been operating autonomously. So that’s why I’ve nitpicked at it: I had been playing around with the same dark imaginings in my writing for years, now reality is catching up to my science fiction, and I wanted to warn people.

But Ben, your note is right on time. For one thing, I’ve already built a dinky little app powered by GPT that is designed to help people learn foreign languages. I did this with much help from GPT-3.5 and GPT-4 because, while I’m proficient in Python, I know next to nothing about web development. So I was asking ChatGPT to help me build the website, but it kept telling me all these different things to do in JavaScript. Finally I got frustrated and said, “Look, basically I don’t know JavaScript, so it’s hard for me to understand what any JavaScript code is doing. Let’s use as little JavaScript as possible,” because I just want Python to do everything on the backend. At which point something seemed to click with the bot. It seemed to understand, “Oh, this guy doesn’t want to think about the front end,” and from there it basically wrote the entire front end for me, with me only saying variations on “make it do this”, “can you just modify it for me” and “that didn’t work”. I realized that this tool is going to massively democratize who has access to advanced uses of computation by shattering entry barriers - almost anybody can now do almost anything the Tech Overlords can do. There is simply no way that the Tech Principalities can control where this goes. This is like the printing press: the efficiencies and utility it’s creating are going to completely blow up everything about how information is handled and used.

But the more profound realization came when I read this:

That is, because this tech is so human-like in its behavior, it is actually giving us clues about how humans think, even though the mechanics of how it learned to think that way are different. The beautiful thing here is that by studying and developing artificial intelligence we can possibly learn more about what true intelligence is. And THAT is a much better inspiration to me than, say, working toward “the singularity” or getting better Google searches or whatever. If I embark on a project to build the most lifelike and convincing fully but artificially human android ever, it will not be because I want to make a billion dollars creating a race of servants, or because I want to play God and create a new life-form, but rather because it would help us understand what God has done when he created us. That’s what excites me the most.

I’ve come around to pretty much agreeing with the “let it rip” view. I’m loath to make any predictions about what will happen except that “Everything will change.” And since that’s the case, I think that trying to regulate it will be worse than pointless, because regulators simply don’t know what they are trying to regulate, and there are such enormous, revolutionary benefits to this that I don’t think it should be hamstrung so early on. Let’s talk about regulation when we know more about what it is we are regulating.

“The City of Man always wins. The Visigoths always sack Rome. The Vandals always sack Hippo. Augustine always dies in the siege. Bad things always happen to good people … at scale.”

This makes me very afraid to ask HAL what the answer to the Fermi paradox is.

Incredible article, ET, thanks!

“I don’t share Augustine’s faith. We don’t mean exactly the same thing when we write ‘the City of God’. But in my heart of hearts I know that we share a similar eye rejoicing in the light.”

A masterpiece.

You make a strong case for not pausing at GPT-4, but going to GPT-5 and beyond as fast as possible.

As long as we protect the old stories from ever being altered.

Yes, I do! Been working on this for years.

This reminds me of this tweet.

I recently wrote (link)…

I think the Terminator-style cultural narrative of AGI or superintelligence “leaking out of a lab” and killing all of us over the manufacturing of paperclips is totally moot. I think it’s radically pessimistic, nihilistic, perhaps born out of apathy and/or leading to it, and incongruent with the experiential! I go back to my “Framing AI as a beast, not a god” stance.

I do, however, totally recognize how humans can use AI against other humans. The Dual Use™ narrative. Counterintuitively, I think, this is not a Protect! This is a Teach!

Recently, I was asking GPT-4 about how digital experiences can exacerbate antisocial behaviors, and it had some really interesting things to say. I would recommend chatting with it about that. But then I asked it, “Well, what can we do about that?” And this is one of the solutions it proposed…

And then I thought, “over the last 20 years, parents were not equipped to TEACH their children how to behave with this new thing.” This gives me hope, because I certainly believe in the propensity of parenthood to protect through teaching.