AI R Us

This commentary is being provided to you as general information only and should not be taken as investment advice. The opinions expressed in these materials represent the personal views of the author(s). It is not investment research or a research recommendation, as it does not constitute substantive research or analysis. Any action that you take as a result of information contained in this document is ultimately your responsibility. Epsilon Theory will not accept liability for any loss or damage, including without limitation to any loss of profit, which may arise directly or indirectly from use of or reliance on such information. Consult your investment advisor before making any investment decisions. It must be noted that no one can accurately predict the future of the market with certainty or guarantee future investment performance. Past performance is not a guarantee of future results.

Statements in this communication are forward-looking statements. The forward-looking statements and other views expressed herein are as of the date of this publication. Actual future results or occurrences may differ significantly from those anticipated in any forward-looking statements, and there is no guarantee that any predictions will come to pass. The views expressed herein are subject to change at any time, due to numerous market and other factors. Epsilon Theory disclaims any obligation to update publicly or revise any forward-looking statements or views expressed herein. This information is neither an offer to sell nor a solicitation of any offer to buy any securities. This commentary has been prepared without regard to the individual financial circumstances and objectives of persons who receive it. Epsilon Theory recommends that investors independently evaluate particular investments and strategies, and encourages investors to seek the advice of a financial advisor. The appropriateness of a particular investment or strategy will depend on an investor’s individual circumstances and objectives.

A good read. I agree with the concept that the Nudging State and Oligarchs will move us, through these tools, to a set of specific strictures within which we will come to understand how to exist. They are searching for this capability today. I wonder if that is the end point for the evolution of us as a species. We will have fulfilled a destiny, not one I want, but one that we as a species will have in effect engineered for ourselves: a world where we are all in stasis, living as we are told we should be. That, to me, seems an end to our evolutionary path.

I still say beware narratives of inevitability or infallibility. The real world is too complex to model, whether it’s contextualized or not. AND your point stands when we turn around and look at the meat in the mirror and how this human family story actually works in practice! I surprised myself by laughing at a joke during the 10 minutes I watched “Nothing, Forever”, albeit more because I wasn’t expecting it than because it was funny. (Why did the chicken attend a seance? To get to the other side.)

I was just cleaning up my desktop and came across this snapshot I took during a talk by futurist Gerd Leonhard, which seems apt.

This may end up being the most underrated piece you ever do, Ben. It is surely the densest in terms of the themes that serve as its scaffolding. A person who’s never read ET doesn’t get this… you’re just Grandpa Simpson shouting at a cloud. One needs to have read roughly two dozen other ET articles to merely grasp the concepts here, and another handful to understand why it’s terrifying. That background is what allows you to write such a brief note, except that even its brevity works against you in our modern times of Content!™, where everybody knows that everybody knows that short articles are just part of the dopamine hit, to be skimmed for the punchline like some sort of mental donut that gives us a jolt before we head back to the couch for another CNNfoxnewstmzlinkedinboredpanda scroll.

Winston loved BB. Ten Minutes Hate. Ten Minutes Love. Not much difference, is there…

Well done. Probably the best thing I will have read this entire year. Certainly on a per-word basis.

It’s possible that Ben may have read this at some point, but I assure you the proximate influence was an extended late-night conversation in our D&D group Slack channel.

I’ve never read that sleep/dreams article (although I will with great interest), and yes, Rusty is right about the late night D&D Slack channel. In fact, I woke up from a dream and scribbled those ideas (and a lot more besides) onto Slack!

Thanks for the kind words, JD, and you’re not wrong about the need for other ET notes to serve as a scaffolding here. Fortunately it is (I think) a single scaffolding, or at least a set of related structures that we’ve built here, and it’s why I think more and more about writing in a different form factor (non-fiction book? scifi trilogy?) to present all this in a more coherent whole.

That dream theory makes me wonder about imagination in general, which might be another kind of overfitting check done while awake, and whether there might be a relationship between imagination capacity and intense dream capacity.

Yet again, Ben, you have put out a wonderful piece of thinking, eloquently expressed.

My first reaction is that you are wrong for at least two reasons:

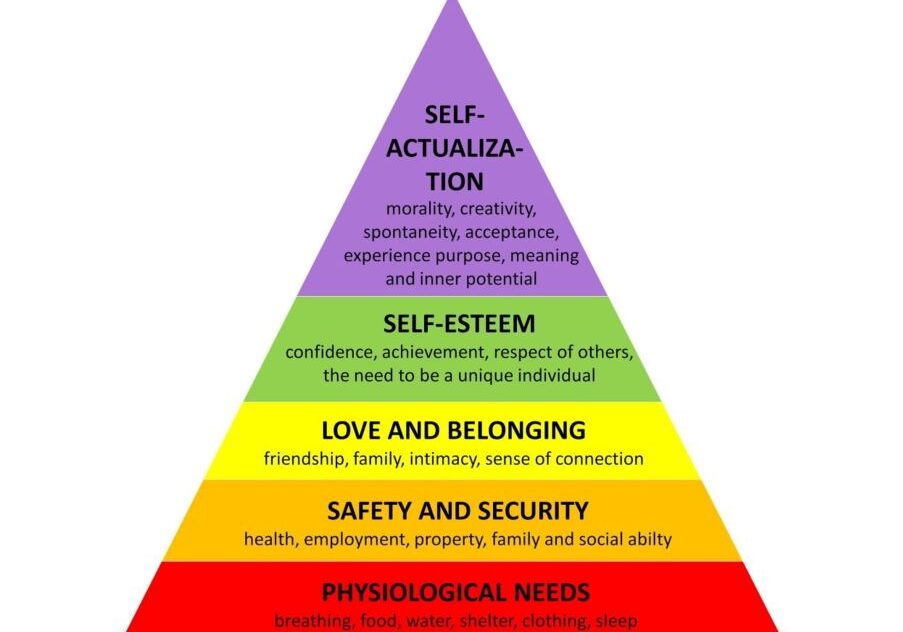

1. I have a body. I have experienced pain and pleasure. I experience terror. I experience fear of death. These experiences, like the feeling of diving into a swimming pool or feeling the sun’s warmth on my face, will never be experienced by a large language model. This part of my training did not come from language. ML will never be like me.

2. I am not physical. I am inhabiting a temporary body and a mind, but I am consciousness. I Am. I have been to other realms with the help of a shaman and her tea. I am something fundamentally beyond the trained neurons in my brain. And so are you. ML will never be conscious. Just like my toaster and my lawn mower, ChatGPT is an apparatus that will never have an experience. ML will never be me. (And there is a chance that I am completely wrong about #2.)

ML may still be the most dangerous political tool ever invented, and I still congratulate you on a very thought provoking piece of writing.

One thing we should do is make it illegal to anthropomorphise ML. No cute names like Alexa. Its voice should be clearly mechanical and non-human. Its pronouns are “it”, “it”, and “it”. ML shall have no human characteristics that could lead to confusion, which is why it must be “it” and not “he” or “she” etc. ML shall have no rights, just like my lawn mower and my toaster.

I believe that fundamental human rights, the ones we think of as unalienable in our national mythology in the US, should not be extended to any creations of man, including corporations!

Seriously. A distillation of so much of the content we have rightly crowdsourced and trained ourselves on here at ET.

“You will own nothing, and you’ll be happy”, including much of the choice-making and context that now surrounds us.

The only thing inevitable is change, and what looks like progress to the Nudging State is essentially a managed entropy of the middle of the bell curve of shared content. Collective Meat Intelligence directed by a fallible but constantly retraining and retrained/managed narrative is all that’s needed. GPTx will most certainly be digesting the mass of text written to object to it and evolving its response.

Personally, I think there are ample postmodern examples of nudged narratives that have been broadly absorbed, if not actually believed or accepted, and that now direct, or have directed, policy. Most didn’t require proof at the level of 2+2=4.

Perhaps it’s recency bias, but I just re-read Dan Gilbert. His Spinozan conclusion, combined with that dopamine jolt, is a pretty powerful one-two punch for those on the couch.

“The Gilbert experiment supports a disturbing conclusion: when given information, one tends to believe it’s true. Thus, people are vulnerable to being easily deceived.”

You don’t have to fool all the people all of the time, or even use the words in the context they expect.